It’s quite a while now since I was first involved with Computer System Validation (CSV). It was around 1994, if I recall correctly, during a large project for AstraZeneca, that I cut my teeth on the computer system lifecycle approach to all things regulated.

So what is CSV and why is it so especially important to the pharmaceutical industry?

To answer what CSV is, I like to think back to a conference I attended in the 1990s where one of the presenters was an American by the name of Ken Chapman. Ken was an inspirational person who had spent a huge part of his career working for Pfizer, during which he developed computer system validation processes for the company while also sitting on a number of external pharmaceutical special interest boards as reviewer and advisor. I bring Ken’s name up because during the conference he dealt with a number of issues, one of which, if I recall correctly, concerned Electronic Records and Electronic Signatures (referred to colloquially within the industry as 21 CFR Part 11).

However, at some point during the conference Ken simplified the whole validation process for the audience by writing down what validation is. I’ll never forget the three lines of text he showed us:

- Say what you are going to do. Specify and document your requirements and how they will be met.

- Do what you said you would do. Develop and build the system according to the specifications you have written.

- Prove you did what you said you would do. Test the system according to the specifications you have written.

Put like this it all seems so simple, doesn’t it? The process itself is essentially simple, but the pitfalls of validation lie in how far some people expect the validation process to extend. It is a bit like asking for an extra hot latte at your local coffee shop: what exactly do you mean by extra hot (please define)?

So how do you go about validating a computer system? First, I would advise deciding which parts of the system are to be validated. For instance, if the production process requires a compressed air system, does that mean the compressor software itself (normally provided by the OEM) must also be validated? I think the question to ask is how the compressor software affects the finished product (i.e. would the operation of the air compressor by itself affect the end product’s quality attributes?). So although you may require the manufacturer to provide assurances that they used a quality process to develop the compressor software, it would not necessarily need to be validated, as further upstream software would cover these requirements. What I mean by this is that the parts of the process that use the compressed air (such as line pressure monitoring) would be validated, as the critical parameter in this instance is likely to be the pressure of the compressed air entering the system.

Another example of where CSV is normally limited is operating systems. Here the size and prevalence of the operating system weigh heavily. By far the most common operating system used by control systems today is Microsoft Windows. The attitude of the regulatory authorities is that such a widely used operating system does not need to be independently validated by itself, because of its large (and therefore proven) user base. This is in some ways similar to the example of the air compressor: the overall control system depends on a range of underlying systems, but these are covered because the overall testing process demonstrates the effective operation of the system as a whole.

Shortlisting the areas of the system that actually need validating is important with regard to the overall validation time, effort, practicality and cost.
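To make the shortlisting idea a little more concrete, here is a minimal sketch of the reasoning above written as a decision rule. The class names, the two yes/no questions and the three outcomes are my own simplification for illustration; they are not taken from any regulation or guideline, and a real scoping exercise would be a documented risk assessment rather than three lines of logic.

```python
from dataclasses import dataclass
from enum import Enum


class Scope(Enum):
    VALIDATE = "validate directly"
    SUPPLIER_ASSURANCE = "rely on supplier quality assurance"
    COVERED_BY_SYSTEM_TEST = "covered by overall system testing"


@dataclass
class Component:
    name: str
    affects_product_quality: bool        # does it act directly on a quality attribute?
    widely_used_platform: bool = False   # e.g. a mainstream operating system


def scope_for(component: Component) -> Scope:
    """Hypothetical scoping rule mirroring the compressor and operating system examples."""
    if component.affects_product_quality:
        return Scope.VALIDATE
    if component.widely_used_platform:
        return Scope.COVERED_BY_SYSTEM_TEST
    return Scope.SUPPLIER_ASSURANCE


components = [
    Component("line pressure monitoring", affects_product_quality=True),
    Component("compressor OEM software", affects_product_quality=False),
    Component("operating system", affects_product_quality=False, widely_used_platform=True),
]

for c in components:
    print(f"{c.name}: {scope_for(c).value}")
```

The point of the sketch is simply that the first question asked of every component is whether it can affect the product’s quality attributes by itself; everything else follows from that answer.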

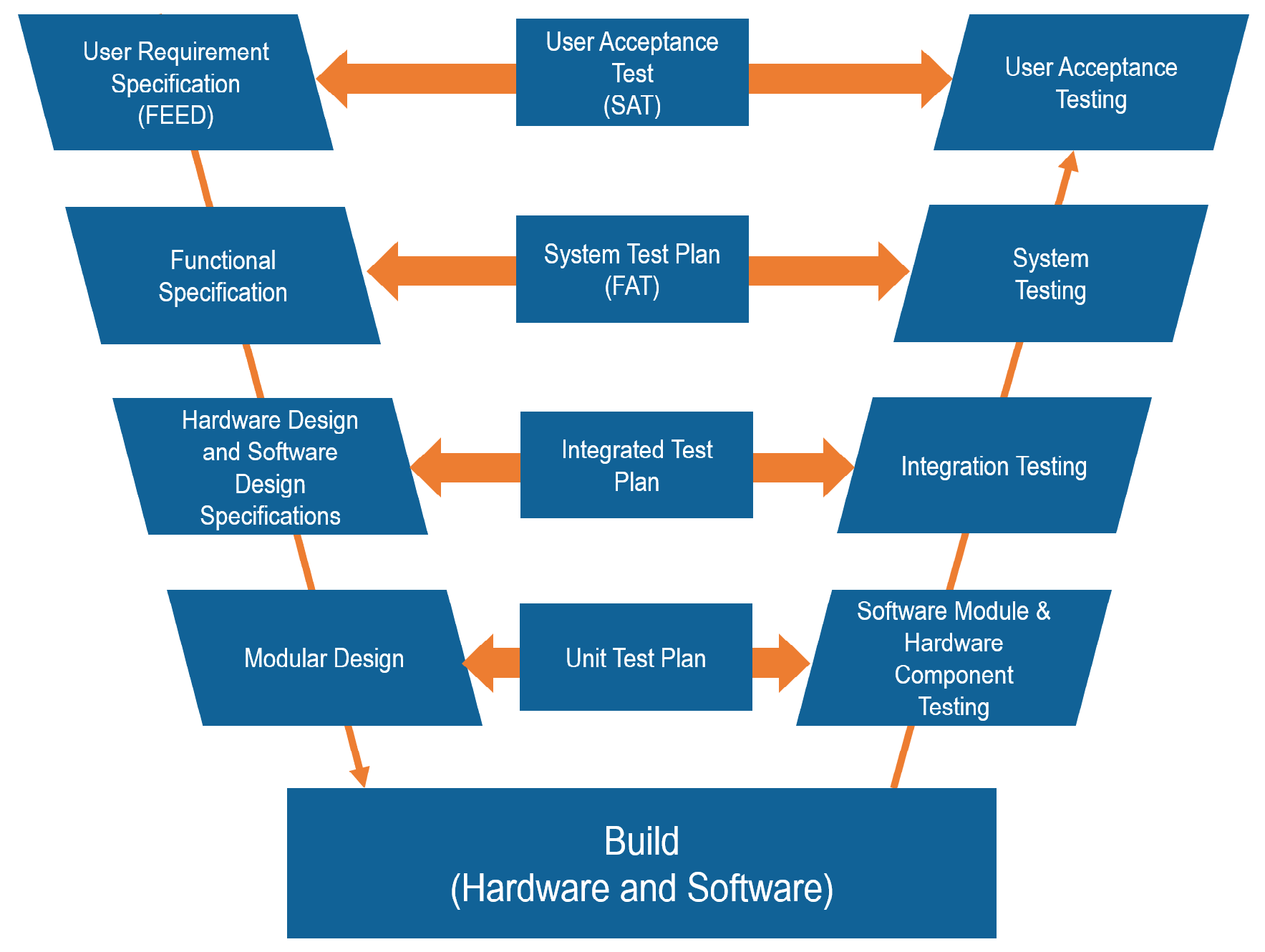

So is there a process or strategy involved in validating a computer system? The answer is that there are models and guidelines. The most popular model is known as the ‘V’ model, in which the various documents defining the system are mapped against each other and against the corresponding test procedures. The idea is that the end user requirements are captured at the very beginning and are then used to ensure that every requirement is carried through into the ensuing specifications. Figure 1 shows the generic ‘V’ model. A small point, but please note that the model gets its name from its ‘V’ shape; the ‘V’ doesn’t actually stand for Validation.
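The mapping of requirements against specifications and test procedures is often kept as a traceability matrix. The following is a minimal sketch of that idea, assuming a made-up numbering scheme (`URS-001`, `FS-4.1`, `TEST-012`) and made-up requirements; none of these identifiers come from a real project or from any guideline.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Requirement:
    req_id: str
    text: str
    spec_refs: list[str] = field(default_factory=list)  # specification sections that address it
    test_refs: list[str] = field(default_factory=list)  # test scripts that verify it


def untraced(requirements: list[Requirement]) -> dict[str, list[str]]:
    """Return the IDs of requirements that lack a specification or a test reference."""
    gaps: dict[str, list[str]] = {"no_spec": [], "no_test": []}
    for r in requirements:
        if not r.spec_refs:
            gaps["no_spec"].append(r.req_id)
        if not r.test_refs:
            gaps["no_test"].append(r.req_id)
    return gaps


# Hypothetical user requirements, for illustration only
requirements = [
    Requirement("URS-001", "Monitor compressed air line pressure", ["FS-4.1"], ["TEST-012"]),
    Requirement("URS-002", "Alarm on low line pressure", ["FS-4.2"], []),
    Requirement("URS-003", "Record batch start and end times"),
]

print(untraced(requirements))
# {'no_spec': ['URS-003'], 'no_test': ['URS-002', 'URS-003']}
```

A requirement that shows up in either list has fallen off one arm of the ‘V’: it was either never specified or never proven by a test.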

Now that we have an overall model that shapes the order and relationship of the various specifications and test scripts, we can begin to consider the documents to be produced and their content. Being an engineer I like frameworks and guidelines, and as luck would have it the ISPE (International Society for Pharmaceutical Engineering) has produced an extremely useful guideline on computer system validation which is directly applicable to control systems: GAMP (Good Automated Manufacturing Practice). It is currently on its 5th iteration and contains a wealth of information and guidance on how to validate a computer system. It also describes the documents required, together with example content lists for each document. So I strongly recommend that anyone venturing into the wacky world of CSV pick up a copy of the GAMP guidelines and save themselves hours of toil wondering just how to carry out the validation process.

Before I finish this article there is something that I would like to mention about software in particular. It seems that the standards developers in batch circles and those involved in defining validation systems are of similar minds, which is a very good example of good practice for all concerned. In batch circles the de facto standard is ISA S88, which defines batch terminology and processes (there is also the ISA S95 standard, which defines the integration between enterprise planning systems and control systems). Both the ISA S88 and ISA S95 standards reference the importance and use of software modularity.

In fact the ISA S88 standard also defines a hierarchy of company structures using such terms as enterprise (a global company), site (an individual manufacturing plant), area (a manufacturing area within the plant, such as a tank farm), unit (such as a reactor or centrifuge) and control module (a number of devices that work together to provide a specific function, such as agitation). In the same way, a software system is split into a hierarchy of smaller software modules. The idea is that it is easier (and more efficient) to provide software functionality in multiple modules than in one large contiguous piece of software. This makes sense in all sorts of ways, not least because it is easier to specify the function of a system piece by piece (module by module). It also makes it easier to test the functionality of each piece separately before it is joined to the other software modules. Harking back to the ‘V’ model above, you will see that software modules and module tests are part of the validation process, with an integration test used to test the system as a whole.

Another benefit is that only modules that share information or lines of command are joined together, which reduces the complexity, the amount of information passed between modules and the resulting communications traffic.
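As a rough sketch of what this modular hierarchy can look like in software, the example below models a unit containing two control modules, tests each module on its own from the bottom up, and only then declares the set ready for an integration test. The `Module` class, the reactor name and the trivial test functions are all hypothetical; real module tests would be documented test scripts, not one-line lambdas.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Module:
    name: str
    level: str                          # e.g. "unit" or "control module"
    module_test: Callable[[], bool]     # returns True when the module test passes
    children: list[Module] = field(default_factory=list)


def run_module_tests(module: Module, results: dict[str, bool] | None = None) -> dict[str, bool]:
    """Test the child modules first, then the parent (bottom-up)."""
    if results is None:
        results = {}
    for child in module.children:
        run_module_tests(child, results)
    results[module.name] = module.module_test()
    return results


def ready_for_integration(results: dict[str, bool]) -> bool:
    """Integration testing only makes sense once every module test has passed."""
    return all(results.values())


# Hypothetical reactor unit built from two control modules
reactor = Module(
    name="Reactor R-101",
    level="unit",
    module_test=lambda: True,
    children=[
        Module("agitation", "control module", module_test=lambda: True),
        Module("temperature control", "control module", module_test=lambda: True),
    ],
)

results = run_module_tests(reactor)
print(results)
print("Ready for integration test:", ready_for_integration(results))
```

Because each module carries its own test, a change to the agitation module means rerunning that module test and the integration test, not rewriting the tests for everything else.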

Finally (I know I said “Before I finish” just before, but please bear with me). You may think that the above validation process is somewhat long-winded. However, the philosophy behind the process is not only to provide a validated system but also to provide an efficient way of developing computer-based hardware and software systems. The result is a well documented and validated system which, once documented, is easier to maintain (and to modify if ever required in the future). The approach (since it is modular) also enables multiple developers to work in parallel, thus reducing the overall development time of the system. The process also lends itself to producing software in libraries for future reuse. Reusable software is extremely useful: from personal experience, I recall reusing a colleague’s reporting software that originally took over 6 months to develop, and it took only 3 days to modify and reuse in another application.